As a public tech service, here are some recently released machine learning devices.

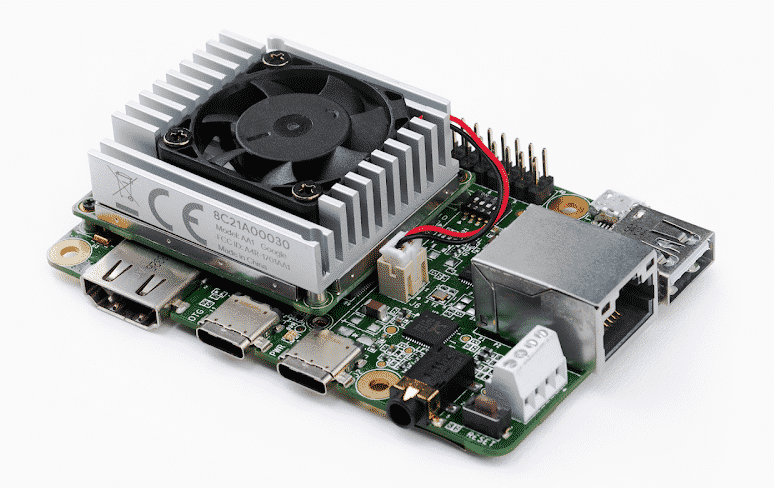

Say “Hello” to Google Coral

Edge TPU, Google’s custom ASIC for machine learning, has arrived.

During Injong Rhee’s keynote at last year’s Google Next conference in San Francisco, Google announced two upcoming hardware products: a development board and a USB accelerator stick. Both are built around Google’s Edge TPU, its purpose-built ASIC designed to run machine learning inference at the edge.

Almost a year on, the hardware quietly launched “into beta” under the name “Coral” earlier today, and both the development board and the USB accelerator are now available for purchase. The new hardware will be officially announced during the TensorFlow Dev Summit later this week.

Comment

All of the compute platforms discussed are inference processors designed to efficiently execute neural networks (that is, run them, not train them). Self-service applications might include speech recognition, speech synthesis, language understanding and processing, customer support chatbots, optical recognition, scanning, counting, tracking, sentiment analysis, advertising optimization, and so on. Imagine engineers train a neural network in the lab for some purpose, then want to deploy it into stand-alone hardware devices that can’t afford to rely on connectivity and cloud computing (e.g., battery-powered devices, smart cameras, smart sensors, autonomous platforms, alarms). While limited compared to large systems, these small new inference-oriented processors are suitable for certain AI deployments at the edge (that is, local inference tasks).
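To make the train-versus-run distinction concrete, here is a minimal Python sketch of what an inference-only device does. The network shape and the weight values are hypothetical placeholders for parameters trained offline "in the lab"; a real Coral deployment would load a compiled TensorFlow Lite model rather than hand-written Python, which this sketch does not attempt to reproduce.

```python
from typing import List, Sequence

# Hypothetical pre-trained parameters (3 inputs -> 2 hidden units -> 2 classes).
# On an edge device these are fixed constants: no loss, no gradients, no updates.
W1: List[List[float]] = [[0.5, -0.2, 0.1],
                         [-0.3, 0.8, 0.4]]
B1: List[float] = [0.0, 0.1]
W2: List[List[float]] = [[1.0, -1.0],
                         [-0.5, 1.5]]
B2: List[float] = [0.0, 0.0]


def dense(weights: List[List[float]], bias: List[float], x: Sequence[float]) -> List[float]:
    """One fully connected layer: computes weights @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Sequence[float]) -> List[float]:
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


def infer(x: Sequence[float]) -> int:
    """Run the forward pass only and return the index of the highest-scoring class."""
    hidden = relu(dense(W1, B1, x))
    scores = dense(W2, B2, hidden)
    return max(range(len(scores)), key=lambda i: scores[i])


if __name__ == "__main__":
    # Each call is purely local: no network connection or cloud service is needed.
    print(infer([1.0, 0.0, 0.0]))
    print(infer([0.0, 1.0, 0.0]))
```

The expensive, data-hungry part (choosing the weights) happens once on large systems; the device repeats only the cheap multiply-accumulate work inside `dense`, which is the kind of arithmetic inference accelerators are built to speed up.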

Arm Helium, a new extension to the Armv8.1-M architecture, provides up to 15 times performance uplift for machine learning (ML) functions and up to 5 times uplift for signal processing functions.